Exercises

And that’s all we will cover with scikit-learn. I urge you to check out the official documentation and explore all the hidden treasures offered by scikit-learn for yourself. Hopefully all the training on functions and OOP from the first week will help you easily understand the documentation!

Now, here are some exercises for you to play around with scikit-learn a bit more!

Many thanks to the following people for contributing to these exercises: Joe Stacey

Predicting the width of Iris petals based on the length of the petals

We will start the exercise with the following code. Our aim is to predict the petal width of Iris flowers based on the petal length.

[Josiah’s question: What is this task? A classification task? A regression task?]

Please note that the code below splits our data into training and test sets in 80/20 proportions.

```python
import sklearn
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn import tree
from sklearn import metrics
from sklearn import linear_model
from sklearn.model_selection import GridSearchCV
import matplotlib.pyplot as plt
import pandas as pd
import numpy as np

# Load the Iris dataset into a DataFrame with named feature columns
data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)

test_proportion = 0.2

# Input feature (petal length) and target (petal width), each a one-column DataFrame
training_data = df[['petal length (cm)']]
observations = df[['petal width (cm)']]

# 80/20 train/test split, made reproducible by the fixed random_state
x_train, x_test, y_train, y_test = train_test_split(training_data,
                                                    observations,
                                                    test_size=test_proportion,
                                                    random_state=42)
```

Exercise 1: Instead of an 80/20 split, we want three sets (train/dev/test) with an 80/10/10 split.

[Josiah says: A dev set is usually used to select hyperparameters, to make sure that you do not overfit the test set. You will cover this in more detail in Week 4 of the Introduction to Machine Learning course]

- Add one more line of code after the code above to implement this split

- Set any random_state arguments to 42
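If you get stuck on Exercise 1, here is one possible sketch. Rather than re-splitting the whole dataset, it splits the 20% held out by the code above in half, so that 10% of the data becomes the dev set and 10% the test set. The names x_dev and y_dev are our own choice, and the setup lines are repeated so that the snippet runs on its own.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Same setup as the exercise code
data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)
training_data = df[['petal length (cm)']]
observations = df[['petal width (cm)']]

# 80/20 split into training data and held-out data
x_train, x_test, y_train, y_test = train_test_split(training_data,
                                                    observations,
                                                    test_size=0.2,
                                                    random_state=42)

# The additional line: halve the held-out 20% into dev (10%) and test (10%)
x_dev, x_test, y_dev, y_test = train_test_split(x_test, y_test,
                                                test_size=0.5,
                                                random_state=42)

print(len(x_train), len(x_dev), len(x_test))
```

With the 150 Iris samples, this gives 120 training, 15 dev and 15 test examples.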

Exercise 2: Train a decision tree model on our training data

- Note: You should not be using DecisionTreeClassifier as in our earlier tutorial, because this is not a classification problem! What should you use instead?
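Petal width is a continuous value, so this is a regression task, which also answers Josiah's question above. One possible sketch, assuming the 80/10/10 split from Exercise 1, swaps in DecisionTreeRegressor from sklearn.tree; passing random_state=42 to the model is our own addition to make the fitted tree reproducible.

```python
import pandas as pd
from sklearn import tree
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)
training_data = df[['petal length (cm)']]
observations = df[['petal width (cm)']]

# 80/10/10 train/dev/test split (see Exercise 1)
x_train, x_test, y_train, y_test = train_test_split(
    training_data, observations, test_size=0.2, random_state=42)
x_dev, x_test, y_dev, y_test = train_test_split(
    x_test, y_test, test_size=0.5, random_state=42)

# Predicting a continuous value calls for a regressor, not a classifier
model = tree.DecisionTreeRegressor(random_state=42)
model.fit(x_train, y_train)

# Predicted petal widths for the first few dev examples
print(model.predict(x_dev.head()))
```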

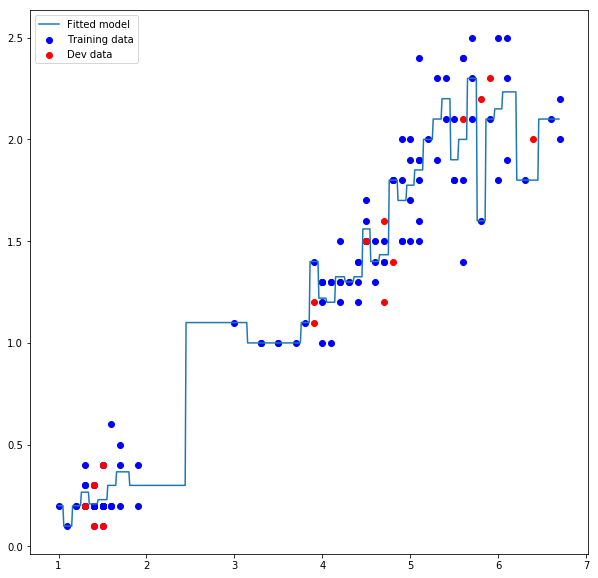

Exercise 3: Using matplotlib, plot the training set as blue points, the dev set as red points, and the fitted model as a blue line

- This will produce the diagram below:
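Here is a sketch of one way to draw this figure, assuming the split from Exercise 1 and the regression tree from Exercise 2. Because a decision tree's predictions are piecewise constant, the fitted model is evaluated on a dense grid of petal lengths (the 500-point grid is our own choice) so that it appears as a step-shaped line rather than a handful of points.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn import tree
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)
training_data = df[['petal length (cm)']]
observations = df[['petal width (cm)']]

x_train, x_test, y_train, y_test = train_test_split(
    training_data, observations, test_size=0.2, random_state=42)
x_dev, x_test, y_dev, y_test = train_test_split(
    x_test, y_test, test_size=0.5, random_state=42)

model = tree.DecisionTreeRegressor(random_state=42).fit(x_train, y_train)

# Dense grid of petal lengths, kept as a DataFrame with the same column name
# as the training data so the model sees consistent feature names
grid = pd.DataFrame({'petal length (cm)': np.linspace(
    df['petal length (cm)'].min(), df['petal length (cm)'].max(), 500)})

fig, ax = plt.subplots()
ax.scatter(x_train['petal length (cm)'], y_train['petal width (cm)'],
           color='blue', label='train')
ax.scatter(x_dev['petal length (cm)'], y_dev['petal width (cm)'],
           color='red', label='dev')
ax.plot(grid['petal length (cm)'], model.predict(grid),
        color='blue', label='decision tree')
ax.set_xlabel('petal length (cm)')
ax.set_ylabel('petal width (cm)')
ax.legend()
plt.show()
```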

Exercise 4: You might notice that the model appears to overfit the training data. Check this by computing the Mean Squared Error (MSE) of both the training predictions and the dev predictions. Use sklearn.metrics to help.
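One possible sketch uses metrics.mean_squared_error, assuming the same split and unpruned regression tree as in the exercises above. If the tree is overfitting, the training MSE should come out noticeably lower than the dev MSE.

```python
import pandas as pd
from sklearn import metrics, tree
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)
training_data = df[['petal length (cm)']]
observations = df[['petal width (cm)']]

x_train, x_test, y_train, y_test = train_test_split(
    training_data, observations, test_size=0.2, random_state=42)
x_dev, x_test, y_dev, y_test = train_test_split(
    x_test, y_test, test_size=0.5, random_state=42)

model = tree.DecisionTreeRegressor(random_state=42).fit(x_train, y_train)

# Compare the error on data the model has seen with data it has not
train_mse = metrics.mean_squared_error(y_train, model.predict(x_train))
dev_mse = metrics.mean_squared_error(y_dev, model.predict(x_dev))
print(f'train MSE: {train_mse:.4f}  dev MSE: {dev_mse:.4f}')
```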

Exercise 5: As the model does indeed appear to be overfitting, now try fitting a linear regression model to the training data.

- Does the MSE improve on the training set?

- Does the MSE improve on the dev set?
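A sketch that fits linear_model.LinearRegression alongside the unpruned tree and reports both MSEs, under the same assumptions as the earlier snippets. The variable names tree_model, lin_model and mse are our own.

```python
import pandas as pd
from sklearn import linear_model, metrics, tree
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)
training_data = df[['petal length (cm)']]
observations = df[['petal width (cm)']]

x_train, x_test, y_train, y_test = train_test_split(
    training_data, observations, test_size=0.2, random_state=42)
x_dev, x_test, y_dev, y_test = train_test_split(
    x_test, y_test, test_size=0.5, random_state=42)

tree_model = tree.DecisionTreeRegressor(random_state=42).fit(x_train, y_train)
lin_model = linear_model.LinearRegression().fit(x_train, y_train)

# (train MSE, dev MSE) for each model
mse = {}
for name, model in [('decision tree', tree_model),
                    ('linear regression', lin_model)]:
    train_mse = metrics.mean_squared_error(y_train, model.predict(x_train))
    dev_mse = metrics.mean_squared_error(y_dev, model.predict(x_dev))
    mse[name] = (train_mse, dev_mse)
    print(f'{name:18s} train MSE: {train_mse:.4f}  dev MSE: {dev_mse:.4f}')
```

Expect the linear model's training MSE to be no lower than the tree's. A fully grown tree on this single feature predicts the mean petal width for each distinct petal length in the training set, which is the lowest training MSE any model using only petal length can achieve. The dev MSE is therefore the more telling comparison.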

Exercise 6: We will now try to improve the decision tree by changing the max tree depth. Use GridSearchCV to test the different possible max_depth values that our decision tree could use.

- Output the full results of the grid search to find the best max_depth parameter
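One possible sketch, assuming the split from Exercise 1. The candidate depths 1 to 10, the neg_mean_squared_error scoring and cv=5 are our own choices: GridSearchCV maximises its score, so MSE is passed as its negative, and cv=5 runs 5-fold cross-validation within the training set. Converting cv_results_ to a DataFrame is a convenient way to output the full results.

```python
import pandas as pd
from sklearn import tree
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)
training_data = df[['petal length (cm)']]
observations = df[['petal width (cm)']]

x_train, x_test, y_train, y_test = train_test_split(
    training_data, observations, test_size=0.2, random_state=42)
x_dev, x_test, y_dev, y_test = train_test_split(
    x_test, y_test, test_size=0.5, random_state=42)

# Candidate depths to try (this range is our own choice)
param_grid = {'max_depth': list(range(1, 11))}

# 5-fold cross-validation on the training set, scored by negative MSE
search = GridSearchCV(tree.DecisionTreeRegressor(random_state=42),
                      param_grid,
                      scoring='neg_mean_squared_error',
                      cv=5)
search.fit(x_train, y_train)

# Full results: one row per candidate max_depth
results = pd.DataFrame(search.cv_results_)
print(results[['param_max_depth', 'mean_test_score',
               'std_test_score', 'rank_test_score']])
print('Best parameters:', search.best_params_)
```

This selects max_depth by cross-validation on the training set only. If you would rather validate on the fixed dev set from Exercise 1, sklearn.model_selection.PredefinedSplit can be passed as cv instead, with the training and dev data supplied together.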

Exercise 7: If we now train our decision tree model with the best max_depth parameter from our grid search, does it outperform the linear regression model?

- How does performance compare across the train, dev and test sets?
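A sketch for comparing the tuned tree with linear regression on all three sets, under the same assumptions as the earlier snippets (including our own choice of depth range and scoring for the grid search). It retrains the tree with the best max_depth found by the grid search; search.best_estimator_ holds an equivalent model, since GridSearchCV refits on the whole training set by default.

```python
import pandas as pd
from sklearn import linear_model, metrics, tree
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)
training_data = df[['petal length (cm)']]
observations = df[['petal width (cm)']]

# 80/10/10 train/dev/test split (see Exercise 1)
x_train, x_test, y_train, y_test = train_test_split(
    training_data, observations, test_size=0.2, random_state=42)
x_dev, x_test, y_dev, y_test = train_test_split(
    x_test, y_test, test_size=0.5, random_state=42)

# Grid search over max_depth as in Exercise 6 (depth range is our own choice)
search = GridSearchCV(tree.DecisionTreeRegressor(random_state=42),
                      {'max_depth': list(range(1, 11))},
                      scoring='neg_mean_squared_error', cv=5)
search.fit(x_train, y_train)

# Retrain the tree with the best depth, and fit the linear model for comparison
best_tree = tree.DecisionTreeRegressor(
    max_depth=search.best_params_['max_depth'], random_state=42)
best_tree.fit(x_train, y_train)
lin_model = linear_model.LinearRegression().fit(x_train, y_train)

splits = {'train': (x_train, y_train),
          'dev': (x_dev, y_dev),
          'test': (x_test, y_test)}
models = {'tuned decision tree': best_tree,
          'linear regression': lin_model}

# MSE for every (model, split) pair
mse = {}
for model_name, model in models.items():
    for split_name, (x, y) in splits.items():
        mse[(model_name, split_name)] = metrics.mean_squared_error(
            y, model.predict(x))

# One row per model, one column per split
print(pd.Series(mse).unstack())
```

In keeping with Josiah's note on dev sets, it is good practice to settle on a model using the train and dev numbers and to look at the test column only at the end.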