Classification

In this module, I will only discuss scikit-learn with regard to the classification task.

As I mentioned in the Introduction to Machine Learning course, classification is one of the most common machine learning tasks. It is usually tackled in a supervised setting, and we will assume this setting for this module.

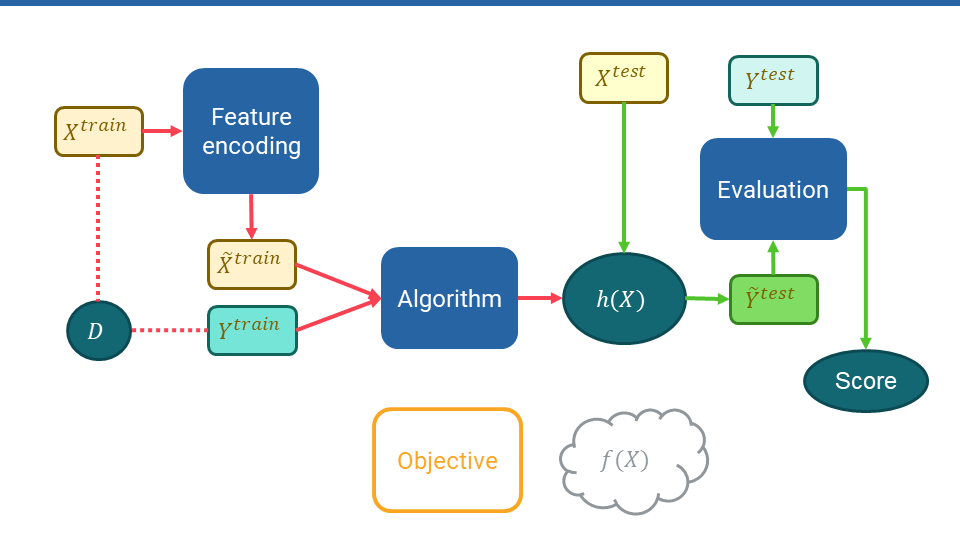

As a quick reminder, an ML model (hypothesis) takes as input a feature vector \(X\) and outputs a predicted label \(\hat{y}\).

In classification, \(\hat{y}\) is a categorical or discrete variable (e.g. “yes”, “no”, “cat”, “car”, “class 1”).

At training time, a supervised learning model takes as input a sequence of \(N\) feature vectors \(\mathbf{X} = \{X^{(i)}\}^N\) and the correct (gold standard/ground truth) labels \(\mathbf{y} = \{y^{(i)}\}^N\) for each of these \(N\) samples.
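To make the notation concrete, here is a minimal sketch of how \(\mathbf{X}\) and \(\mathbf{y}\) might be laid out as NumPy arrays. The feature values and the labels are made up purely for illustration; the only point is that row \(i\) of `X` is the feature vector \(X^{(i)}\) and entry \(i\) of `y` is its label \(y^{(i)}\).

```python
import numpy as np

# Hypothetical toy data (invented for illustration): N = 4 samples, 2 features each.
X = np.array([
    [5.1, 3.5],
    [4.9, 3.0],
    [6.7, 3.1],
    [6.3, 2.5],
])

# One gold standard label per sample, in the same order as the rows of X.
y = np.array(["cat", "cat", "car", "car"])

N = X.shape[0]   # number of samples
print(X.shape)   # (4, 2): N rows, one column per feature
print(y.shape)   # (4,): one label per sample
```

The first dimension of `X` and the length of `y` must match, since each feature vector needs exactly one label.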

The algorithm will then try to fit the model to \(\mathbf{X}\) and \(\mathbf{y}\).

At test time, you will take some previously unseen data \(\mathbf{X}^{test}\) and predict the output labels \(\hat{\mathbf{y}}^{test}\) for these.

You then evaluate your model by comparing \(\hat{\mathbf{y}}^{test}\) against the gold standard/ground truth labels \(\mathbf{y}^{test}\).
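One simple way to do this comparison is accuracy: the fraction of test samples whose predicted label matches the ground truth label. The sketch below computes it by hand with NumPy. The two label arrays are invented for illustration, and accuracy is only one of several possible metrics, chosen here because it is the easiest to read.

```python
import numpy as np

# Hypothetical ground truth and predicted labels for 5 test samples.
y_test = np.array(["yes", "no", "yes", "yes", "no"])
y_pred = np.array(["yes", "no", "no", "yes", "no"])

# Element-wise comparison gives True where the prediction is correct.
correct = (y_pred == y_test)

# The mean of a boolean array is the fraction of True values.
accuracy = correct.mean()
print(accuracy)  # 0.8 (4 out of 5 predictions are correct)
```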

Here is a diagram of the pipeline from the Introduction to Machine Learning course. You have set this as your wallpaper or have it framed up in your room, haven’t you? 🥺

In scikit-learn, the process is exactly the same:

- Arrange data into \(\mathbf{X}\) and \(\mathbf{y}\)

- Choose your model

- Initialise your model with some hyperparameters

- Fit your model to \(\mathbf{X}\) and \(\mathbf{y}\)

- Predict labels \(\hat{\mathbf{y}}^{test}\) for \(\mathbf{X}^{test}\)

- Evaluate the model performance by comparing \(\hat{\mathbf{y}}^{test}\) against \(\mathbf{y}^{test}\)
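As a preview, the six steps above might look like this in code. This is only a sketch under my own assumptions: I picked the built-in Iris dataset, a k-nearest neighbours classifier with `n_neighbors=5`, a 75/25 train/test split, and accuracy as the metric purely for illustration. Any other scikit-learn classifier follows the same `fit`/`predict` pattern.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# 1. Arrange data into X and y (Iris is an assumed example dataset).
X, y = load_iris(return_X_y=True)

# Hold out part of the data as the previously unseen test set.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# 2. Choose your model, and 3. initialise it with some hyperparameters.
model = KNeighborsClassifier(n_neighbors=5)

# 4. Fit the model to the training data.
model.fit(X_train, y_train)

# 5. Predict labels for the test data.
y_pred = model.predict(X_test)

# 6. Evaluate by comparing the predictions against the ground truth labels.
accuracy = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {accuracy:.2f}")
```

Do not worry if some of these calls look unfamiliar for now.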

I will take you through the whole pipeline. Step by step, we will discuss how to apply scikit-learn to the classification problem.