Evaluation

After fitting our model to training data and performing predictions on the test instances, the final step is to evaluate the performance of our model.

As I described in the Introduction to Machine Learning course, you need an evaluation metric to measure how well your model performs.

As you might have guessed by now, scikit-learn has implemented a whole set of metrics for you to use off the shelf. The complete list of metrics offered for the classification task is available in the official documentation.

For example, we might choose to evaluate our model with the accuracy metric.

from sklearn.metrics import accuracy_score

print(accuracy_score(y_test, predictions))

## 0.9666666666666667

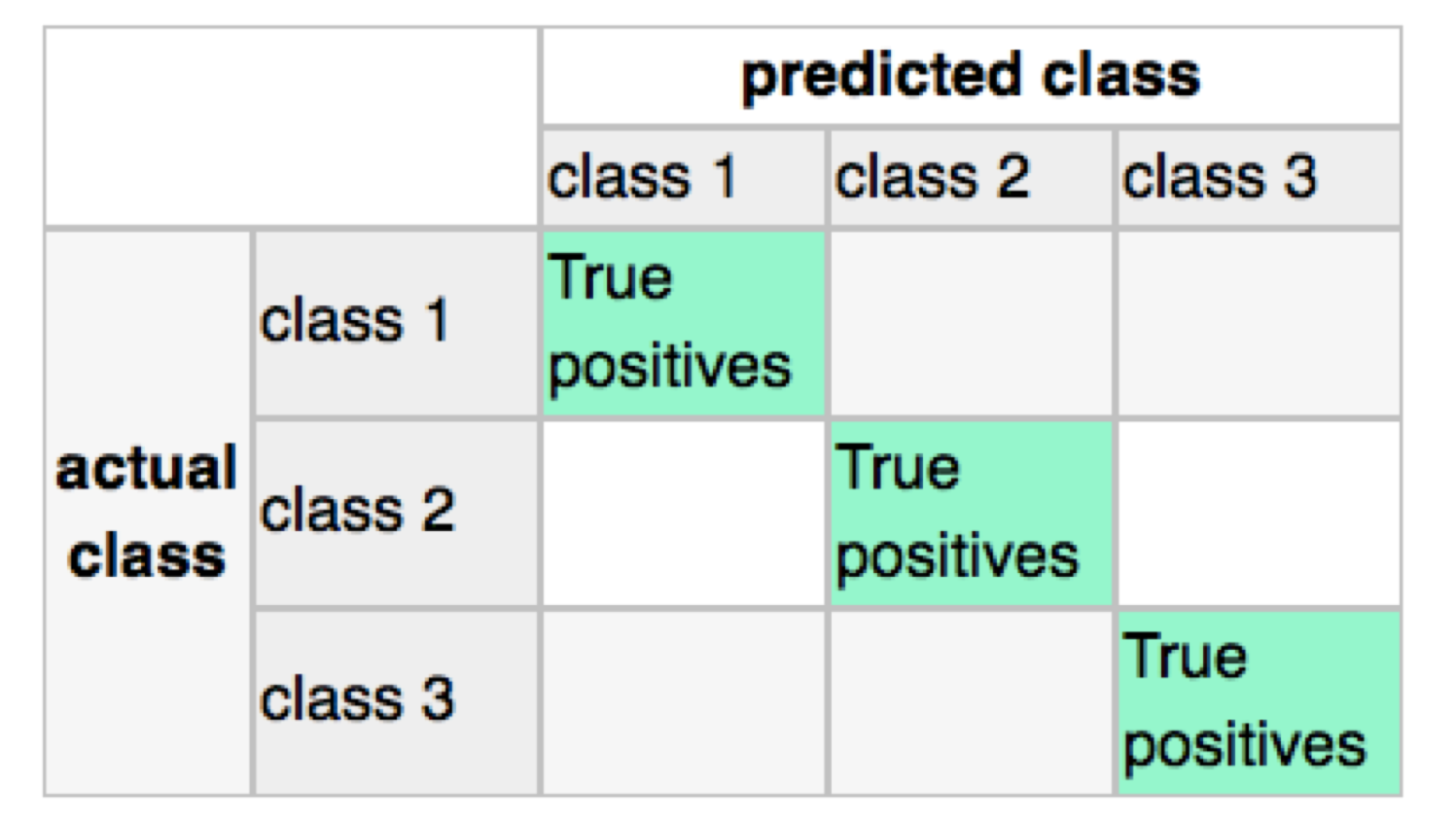
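To make the number concrete, accuracy is simply the fraction of test instances whose predicted label equals the true label. The following is a minimal sketch in plain Python; the function name `manual_accuracy` and the toy label lists are hypothetical and are not the tutorial's actual `y_test` and `predictions`.

```python
# Hypothetical toy labels for illustration only (not the tutorial's test set).
y_true = [0, 1, 2, 2, 1, 0]
y_pred = [0, 1, 2, 1, 1, 0]


def manual_accuracy(y_true, y_pred):
    """Return the fraction of positions where prediction and truth agree."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# 5 of the 6 toy predictions match their true label.
print(manual_accuracy(y_true, y_pred))
```

For the same inputs, `accuracy_score(y_true, y_pred)` should return the same value, since by default it also reports the fraction of correctly classified samples.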

You may also choose to examine the confusion matrix of your predictions vs. ground truth.

from sklearn.metrics import confusion_matrix

print(confusion_matrix(y_test, predictions))

## [[10  0  0]
##  [ 1  8  0]
##  [ 0  0 11]]
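In scikit-learn's convention, each row of the confusion matrix corresponds to a true class and each column to a predicted class, so the diagonal holds the correct predictions. As a rough sketch of how to read the matrix, the snippet below copies the values printed above into a plain list (the variable names are illustrative) and derives the accuracy, per-class precision, and per-class recall from them.

```python
# Confusion matrix copied from the printed output.
# Rows are true classes, columns are predicted classes.
cm = [
    [10, 0, 0],
    [1, 8, 0],
    [0, 0, 11],
]

n_classes = len(cm)
total = sum(sum(row) for row in cm)

# Correct predictions sit on the diagonal.
correct = sum(cm[i][i] for i in range(n_classes))
accuracy = correct / total

# Recall for class i: correct predictions divided by the row sum
# (all instances that truly belong to class i).
recall = [cm[i][i] / sum(cm[i]) for i in range(n_classes)]

# Precision for class i: correct predictions divided by the column sum
# (all instances that were predicted as class i).
precision = [
    cm[i][i] / sum(cm[r][i] for r in range(n_classes))
    for i in range(n_classes)
]

print(round(accuracy, 4))                 # 0.9667
print([round(p, 2) for p in precision])   # [0.91, 1.0, 1.0]
print([round(r, 2) for r in recall])      # [1.0, 0.89, 1.0]
```

The single off-diagonal entry (row 1, column 0) is one instance of class 1 that was predicted as class 0, which is why class 0 loses some precision and class 1 loses some recall.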

There is also a classification_report() function that summarizes the precision, recall, and f1-score of your classifier for each class.

from sklearn.metrics import classification_report

print(classification_report(y_test, predictions))

##               precision    recall  f1-score   support
##
##            0       0.91      1.00      0.95        10
##            1       1.00      0.89      0.94         9
##            2       1.00      1.00      1.00        11
##
##     accuracy                           0.97        30
##    macro avg       0.97      0.96      0.96        30
## weighted avg       0.97      0.97      0.97        30
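The last two rows of the report differ only in how the per-class scores are averaged: "macro avg" is the unweighted mean over classes, while "weighted avg" weights each class by its support. The sketch below reproduces the f1-score column under that reading; the per-class counts are read off the confusion matrix shown earlier, and the names `counts` and `f1` are illustrative.

```python
# Per-class counts taken from the confusion matrix:
# (true positives, number predicted as the class, support)
counts = {
    0: (10, 11, 10),
    1: (8, 8, 9),
    2: (11, 11, 11),
}


def f1(tp, predicted, support):
    """Harmonic mean of precision (tp / predicted) and recall (tp / support)."""
    precision = tp / predicted
    recall = tp / support
    return 2 * precision * recall / (precision + recall)


f1_scores = {label: f1(*values) for label, values in counts.items()}
supports = {label: values[2] for label, values in counts.items()}
total = sum(supports.values())

# Macro average: every class counts equally.
macro_f1 = sum(f1_scores.values()) / len(f1_scores)

# Weighted average: each class counts in proportion to its support.
weighted_f1 = sum(f1_scores[c] * supports[c] for c in f1_scores) / total

print({c: round(s, 2) for c, s in f1_scores.items()})  # {0: 0.95, 1: 0.94, 2: 1.0}
print(round(macro_f1, 2))     # 0.96
print(round(weighted_f1, 2))  # 0.97
```

With nearly balanced classes, as here, the two averages are close; they tend to diverge when some classes have far fewer instances than others.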

We will not go into the details of the individual metrics here, as these will be covered in your Introduction to Machine Learning course.