Introduction to Deep Learning with PyTorch

Chapter 4: PyTorch for Automatic Gradient Descent

The Secrets of torch Parameters

torch provides convenient tools to compute gradients automatically. When operations (such as multiplication, addition, exp…) are applied to a torch Parameter, they are recorded so that the gradients can later be computed from them.

import torch

# Wrap a 2x2 float tensor in a Parameter so that autograd tracks it
tensor = torch.Tensor([[1, 2],
                       [3, 4]])
parameters = torch.nn.Parameter(tensor)
print(parameters)

The code above produces the following output:

Parameter containing:
tensor([[1., 2.],
        [3., 4.]], requires_grad=True)

The requires_grad=True flag indicates that every operation applied to the parameters will be tracked, so that their gradients can be computed automatically when needed.
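To make the role of requires_grad=True concrete, here is a minimal sketch that computes a gradient from the same Parameter. The scalar loss (the sum of the squared entries) is an assumption chosen only for illustration, because its gradient, 2 * parameters, is easy to check by hand.

```python
import torch

tensor = torch.Tensor([[1, 2],
                       [3, 4]])
parameters = torch.nn.Parameter(tensor)

# Illustrative scalar loss (an assumption, not from the text): sum of squares
loss = (parameters ** 2).sum()

# Walk the recorded operations backward and fill parameters.grad
loss.backward()

# d(loss)/d(parameters) = 2 * parameters
print(parameters.grad)
```

The printed gradient should equal 2 * parameters, that is [[2., 4.], [6., 8.]]. Before backward() is called, parameters.grad is simply None.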

Dynamically Building the Graph of Operations

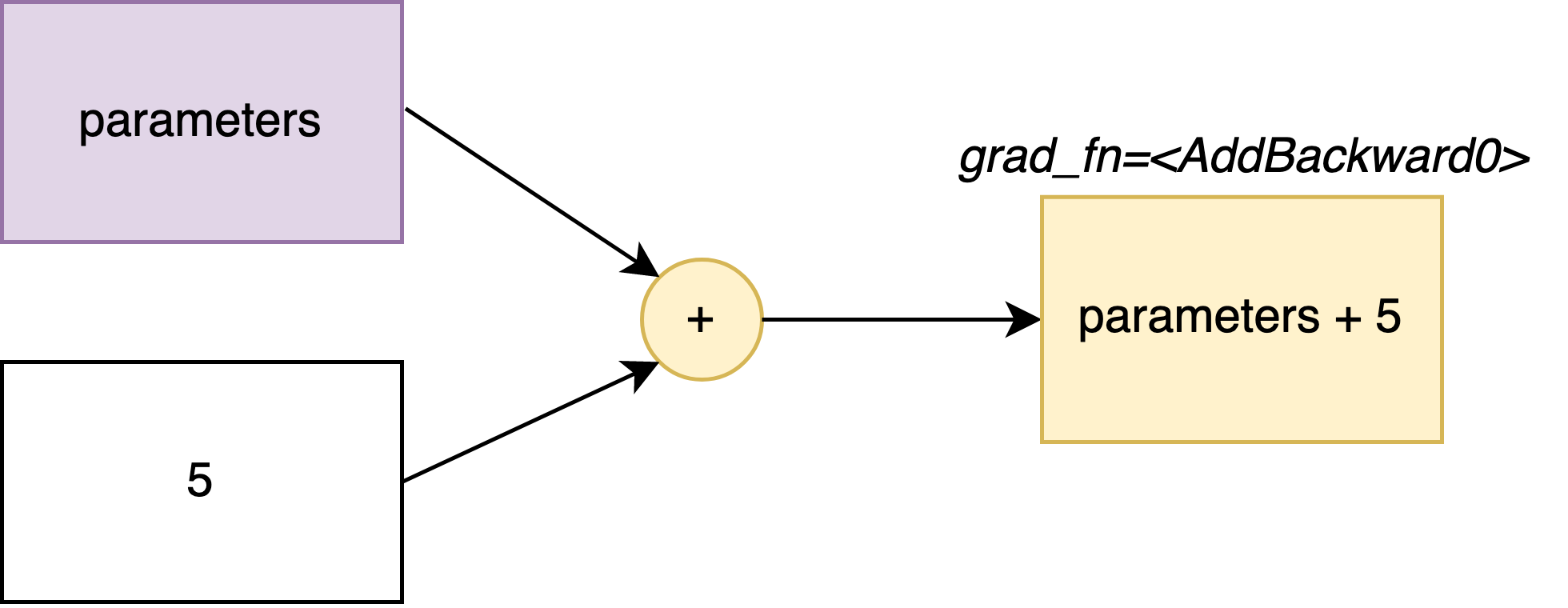

Every time we perform an operation on a torch Parameter, that operation is recorded in the resulting tensor. The recording continues through every subsequent operation, all the way to the loss function.

For example, let’s see what happens after an addition:

import torch

tensor = torch.Tensor([[1, 2],
                       [3, 4]])
parameters = torch.nn.Parameter(tensor)

# Adding a constant creates a new tensor that remembers the addition
temp = parameters + 5
print(temp)

Output:

tensor([[6., 7.],
        [8., 9.]], grad_fn=<AddBackward0>)

The grad_fn=<AddBackward0> indicates that the tensor remembers the last operation performed on the parameters (in this case, the addition of 5).

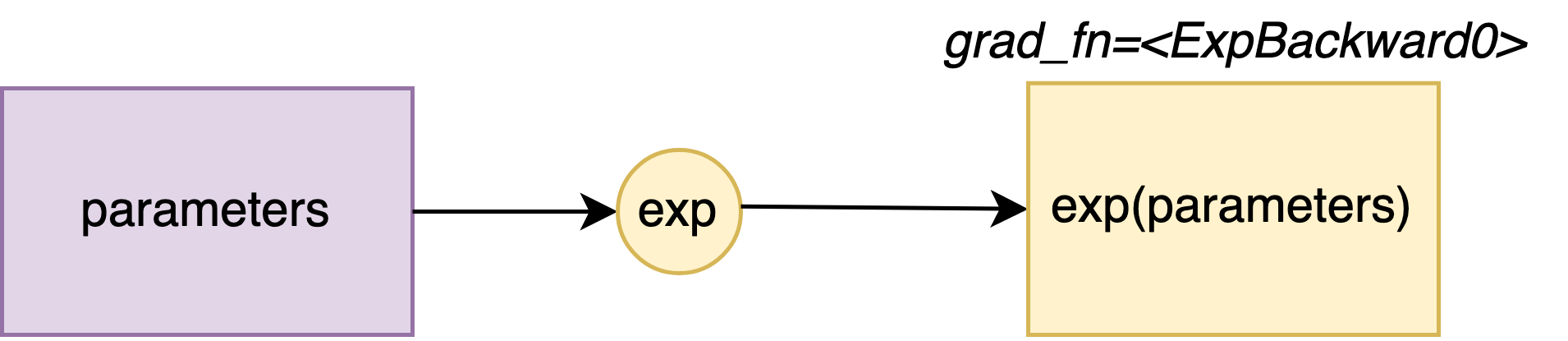
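The recorded operation can also be inspected directly rather than read off the printed tensor. The sketch below is only an illustration, assuming the same 2x2 Parameter as above; it uses the is_leaf and grad_fn attributes of a tensor and the next_functions attribute of the grad_fn node.

```python
import torch

parameters = torch.nn.Parameter(torch.Tensor([[1, 2],
                                              [3, 4]]))
temp = parameters + 5

# The Parameter was created directly, not by an operation: it is a leaf
print(parameters.is_leaf, parameters.grad_fn)

# temp was produced by an operation, so it carries a grad_fn node
print(temp.is_leaf, type(temp.grad_fn).__name__)

# next_functions links this node to the graph nodes of its inputs
print(temp.grad_fn.next_functions)
```

The Parameter itself has no grad_fn (it is None), while temp points to the node that recorded the addition. Following next_functions from node to node is how the graph of operations is walked back toward the parameters.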

The same thing happens with torch mathematical functions, such as torch.exp:

import torch

tensor = torch.Tensor([[1, 2],
                       [3, 4]])
parameters = torch.nn.Parameter(tensor)

# Applying torch.exp is recorded in the same way as the addition
temp = torch.exp(parameters)
print(temp)

Output:

tensor([[ 2.7183,  7.3891],
        [20.0855, 54.5982]], grad_fn=<ExpBackward0>)
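Since the operations are recorded all the way to the loss function, the exp example can be extended into a complete gradient computation. This is a minimal sketch: the loss used here (the sum of all entries of temp) is an assumption made for illustration, chosen because the gradient of sum(exp(p)) with respect to p is exp(p) itself.

```python
import torch

tensor = torch.Tensor([[1, 2],
                       [3, 4]])
parameters = torch.nn.Parameter(tensor)

temp = torch.exp(parameters)

# Illustrative scalar loss (an assumption, not from the text)
loss = temp.sum()

# The loss also carries a grad_fn, linking it back to exp and the parameters
print(loss.grad_fn)

# Backpropagate through sum and exp down to the parameters
loss.backward()

# The gradient should match exp(parameters), i.e. the values of temp
print(parameters.grad)
print(torch.allclose(parameters.grad, temp.detach()))
```

The gradient stored in parameters.grad should match the values of temp printed earlier, which is a quick way to check that the recorded graph was followed correctly.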