Various Types of Neural Networks

In this material, we have mostly focused on Multi-Layer Perceptrons, as they provide an intuitive starting point in Deep Learning.

However, there are many tasks for which they are not the most effective solution.

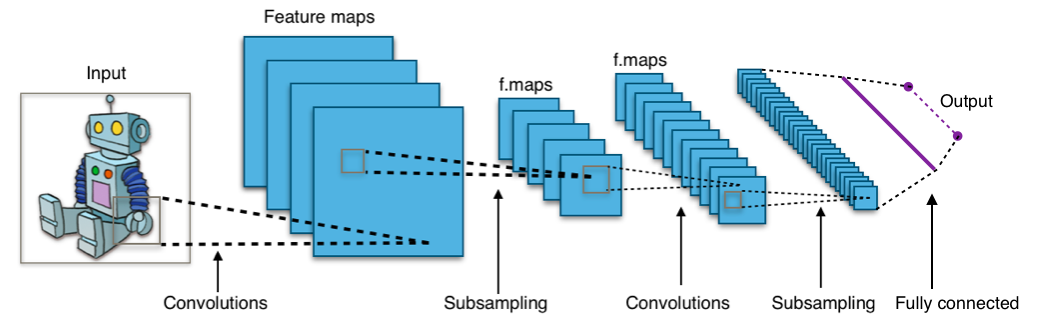

For example, in computer vision tasks, it is preferable to use layers that apply convolutions to the images.

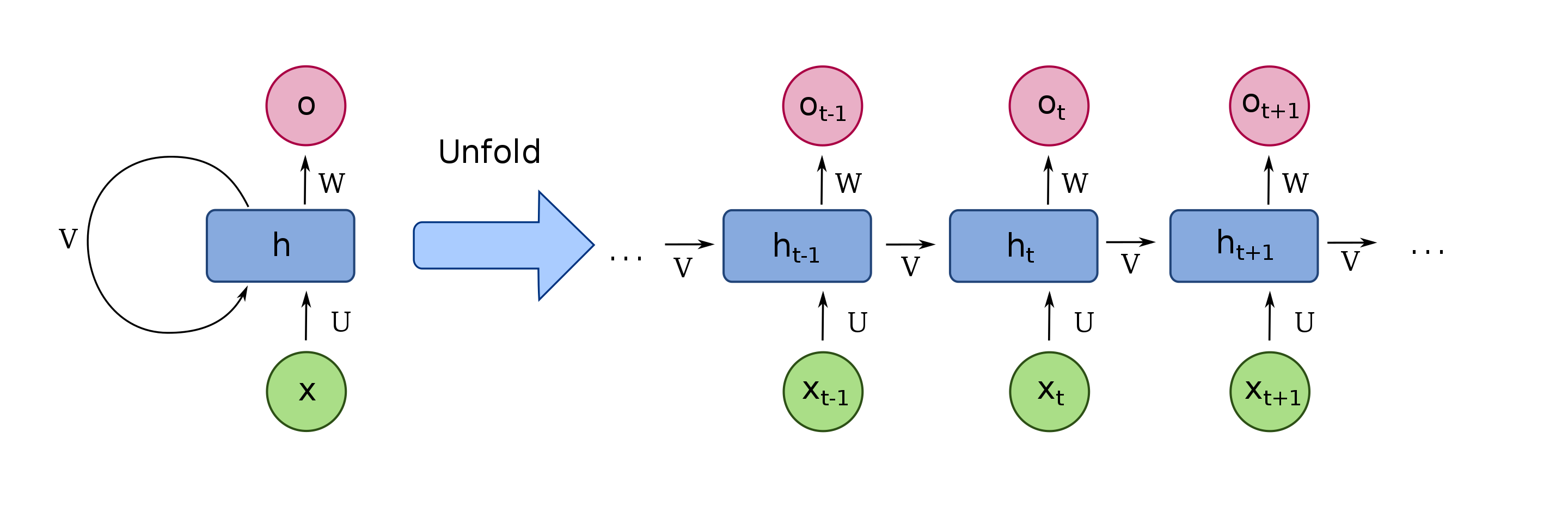
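
To make the idea of a convolutional layer concrete, here is a minimal sketch in plain Python. It assumes a single-channel image, no padding, and a stride of 1, and it computes the sliding-window sum of products (strictly a cross-correlation, which is what deep learning libraries usually call a convolution). The function name `conv2d`, the toy image, and the hand-picked kernel are illustrative assumptions; in a real network the kernel values would be learned.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            # Weighted sum of the patch whose top-left corner is (i, j).
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical hand-picked kernel that responds where intensity
# increases from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The same small kernel is reused at every position of the image, so the layer needs far fewer parameters than a fully connected layer over all pixels, and it responds to the edge wherever it appears.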

Also, Recurrent Neural Networks (RNNs) can be used to process sequences of data. To do so, some of the layers (including the input layers) contain a connection to themselves, so that their output at one step of the sequence is fed back as an input at the next step.

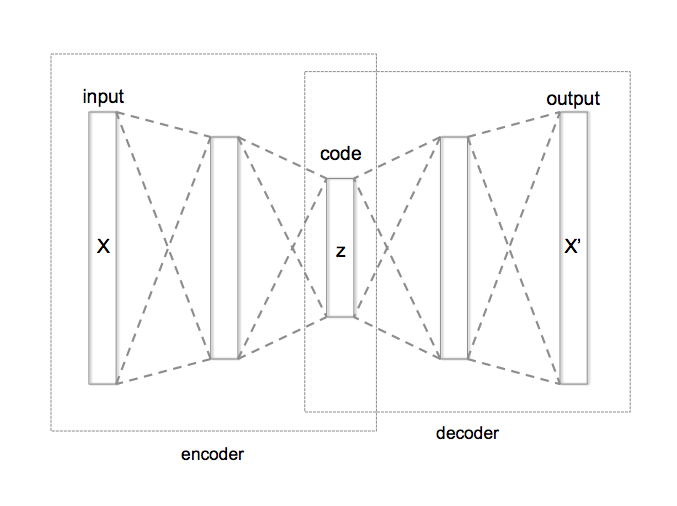
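
The self-connection can be sketched with a single recurrent unit. The example below is a simplified illustration, not a full RNN layer: it assumes a scalar input and a scalar hidden state, a `tanh` activation, and hand-picked weights (`w_in`, `w_rec`, and `bias` are hypothetical names and values; in practice they would be learned).

```python
import math


def rnn_forward(inputs, w_in, w_rec, bias):
    """Run one recurrent unit over a sequence and return every hidden state."""
    hidden = 0.0  # initial state, before any input has been seen
    states = []
    for x in inputs:
        # The previous hidden state is fed back in: this is the self-connection.
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states


# A sequence with a single non-zero input at the first step.
sequence = [1.0, 0.0, 0.0]

with_memory = rnn_forward(sequence, w_in=1.0, w_rec=0.5, bias=0.0)
without_memory = rnn_forward(sequence, w_in=1.0, w_rec=0.0, bias=0.0)

print(with_memory)     # later states still carry a trace of the first input
print(without_memory)  # with no recurrent weight, later states fall back to zero
```

With a non-zero recurrent weight, the state at later steps still depends on the first input even though the later inputs are zero, which is what lets the network process a sequence rather than isolated items.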

The example shown before corresponds to a classification task. Sometimes, the purpose is not to classify data, but to learn a compressed representation of it. For example, autoencoders can be used to learn a low-dimensional latent representation of some data. They usually contain an encoder \(e_{\theta}\) and a decoder \(d_{\theta}\). The parameters \(\theta\) are learned by backpropagation to minimise, for each data point \(x\), the distance between \(x\) and its reconstruction \(x' = d_{\theta}(e_{\theta}(x))\), obtained after going through the encoder and the decoder. In other words, in an autoencoder, we intend to find the optimal \(\theta\) that minimises the distance \(\| x - x'\|_2\).
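
As an illustration of this objective, here is a minimal sketch of a linear autoencoder in plain Python. Several choices are assumptions made for the example: the data are 2D points lying on a line, the latent code is a single number, \(\theta\) is split into encoder weights `enc_w` and decoder weights `dec_w`, and the loss is the mean squared distance \(\| x - x'\|_2^2\), which has the same minimiser as the distance itself. The gradients are written out by hand with the chain rule, which is what backpropagation computes for this tiny model.

```python
def encode(x, enc_w):
    """Encoder: compress a 2D point into a single latent number."""
    return enc_w[0] * x[0] + enc_w[1] * x[1]


def decode(z, dec_w):
    """Decoder: map the latent number back to a 2D point."""
    return [dec_w[0] * z, dec_w[1] * z]


def mean_squared_distance(data, enc_w, dec_w):
    """Average of ||x - x'||^2 over the data set."""
    total = 0.0
    for x in data:
        x_rec = decode(encode(x, enc_w), dec_w)
        total += (x[0] - x_rec[0]) ** 2 + (x[1] - x_rec[1]) ** 2
    return total / len(data)


def train(data, enc_w, dec_w, lr=0.02, steps=2000):
    """Full-batch gradient descent on the mean squared reconstruction distance."""
    enc_w, dec_w = list(enc_w), list(dec_w)
    n = len(data)
    for _ in range(steps):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            z = encode(x, enc_w)
            x_rec = decode(z, dec_w)
            r = [x_rec[0] - x[0], x_rec[1] - x[1]]  # reconstruction error
            # Gradient with respect to the decoder weights.
            g_dec[0] += 2 * r[0] * z / n
            g_dec[1] += 2 * r[1] * z / n
            # Propagate the error back through the decoder to the latent code,
            # then to the encoder weights.
            g_z = 2 * (r[0] * dec_w[0] + r[1] * dec_w[1])
            g_enc[0] += g_z * x[0] / n
            g_enc[1] += g_z * x[1] / n
        for k in range(2):
            enc_w[k] -= lr * g_enc[k]
            dec_w[k] -= lr * g_dec[k]
    return enc_w, dec_w


# Toy data: 2D points on the line x2 = 2 * x1, so one number is enough
# to describe each point.
data = [[t, 2 * t] for t in (-1.0, -0.5, 0.5, 1.0)]

init_enc, init_dec = [0.5, 0.5], [0.5, 0.5]  # arbitrary fixed starting values
initial_loss = mean_squared_distance(data, init_enc, init_dec)
enc_w, dec_w = train(data, init_enc, init_dec)
final_loss = mean_squared_distance(data, enc_w, dec_w)

print("loss before training:", initial_loss)
print("loss after training:", final_loss)
```

Because the toy data lie on a line, a one-number latent code can describe them without loss, so the reconstruction error can be driven close to zero. Real autoencoders use non-linear layers and an automatic differentiation library rather than hand-written gradients.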